Brandon Ayoungchee

Arts & Features Writer

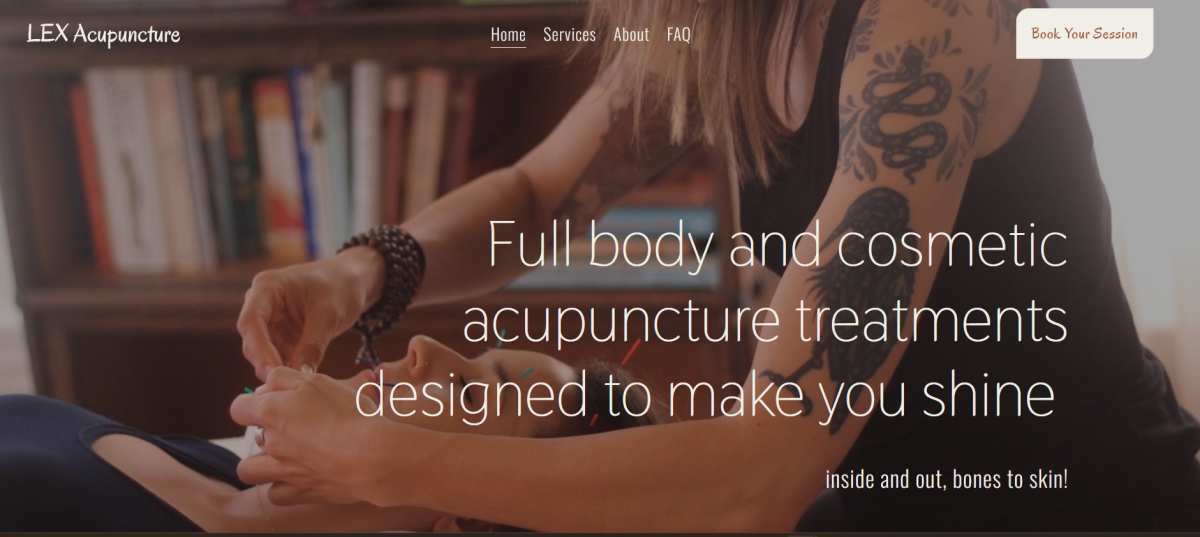

Marietta Cameron, chair of the Computer Science department, attended the virtual panel discussion of Coded Bias, bringing her knowledge of artificial intelligence to the conversation.

UNC Asheville professors host a virtual panel to discuss the documentary Coded Bias, a frightening window into the bias coded into facial recognition technology.

Coded Bias explores this frightening reality in a world increasingly built around technology. The documentary follows M.I.T. Media Lab computer scientist Joy Buolamwini, along with data scientists, mathematicians and watchdog groups from around the world, as they fight to expose the discrimination built into facial recognition algorithms.

On March 18, Senior Lecturer Anne Slatton of the mass communication department hosted a virtual discussion of the film. Panelists included UNCA faculty William Bares, Marietta Cameron, Sarah Judson and Susan Reiser.

Slatton, who had already seen the film, opened the panel by explaining why she wanted to share and discuss it.

“I watched the film with the Women’s Society of Film and I wanted to share and talk to you all about it,” she said.

As the panel discussed, the most frightening aspect of Coded Bias is its reminder that society controls technology. Evil artificial intelligence is a possibility, but for the most part we are in control of the robots we create.

“The thing of technology is that we create it, which this film highlights. There are not enough regulations and checks to be trustworthy in this science of robotic engineering,” said William Bares, an associate professor of music.

In the film, author Amy Webb talked about the importance of instilling Western democratic ideals into our A.I., given fears that A.I. surveillance could become a tool for authoritarian governments.

“I think Amy talked a lot about the top-down use of A.I. used by the police,” said Sarah Judson, an associate professor of history. “For example, that scene in Britain where people are being identified by the police and fined if they refuse to cooperate. I think she was objecting to the authoritarian nature of that. But I’m also referring to a desire for justice and less bias.”

When asked whether science is truly objective on political or racial grounds, Judson agreed that bias is ingrained in facial recognition.

“Yes. The idea that science is objective is not entirely so objective. The space where we see this one truth, clearly, is reflective of a lot of biases,” Judson said.

The discussion also raised the question of whether conservatism would control the bias in facial recognition. According to Associate Professor Marietta Cameron, however, bias is a tool that can be exploited by both sides of the political spectrum.

“I think the bias depicted in the documentary talks about both ends of the spectrum quite well. I think it depicts the conservative ideology and also depicts the liberal ideology too. They don’t show who is really less biased than the other,” Cameron said.

With the technology already showing bias based on skin color and gender, transgender and non-binary people should also be concerned. Judson wondered whether this will create a set of rigidly categorized identities.

“It makes you think of everyone at risk with all these forms of categories that are not binary,” Judson said.

“Let me be clear and tell you something: everybody is a target,” Cameron replied, laughing.

Senior Adviser Susan Reiser noted that most identification systems assume individuals are male.

“We see it every day in data. Usually the inferences these systems make, based on our data, assume we are male. I buy a lot of little batteries because I use electronics on projects. The data assumes I can’t hear. When my children were little, I bought a lot of Polident to clean their retainers. It assumes I don’t have teeth. This data they keep collecting on us are inferences. This happens on the web. We have a ton of digital aggregators collecting data and selling on it,” Reiser said.

Slatton agreed, noting that people hand over this data without thinking. When people urgently need something, they buy it without considering the data being recorded.

“It’s true because we give it away willingly without thinking about the implications. There was a section in the movie [about someone] who was on parole, and it used the data to categorize who was at risk of committing a crime. There are a lot of very wide-flung implications setting themselves up on what they post on Facebook, with no information they are getting recorded, which is very alarming,” she said.

Reiser described these inferences as a kind of hidden business: companies collect the data and use it to advertise and sell to you online.

“It’s about why they are collecting our data and that is to sell us something,” she said.

Slatton closed the panel by raising awareness of the issue. The documentary is meant to galvanize viewers by confronting them with the danger of being categorized: a faulty system could lead to someone being charged with a serious crime they did not commit because of their skin color. She urged viewers to take notice and to take action.

“If you look at Coded Bias, go online and look at their website. There are several calls to action of things you can actually do. Legislation and talking to Congress people. It’s gaining a lot of momentum and I think it’s going to get out there. The more people that see the film, done by a female filmmaker, with all these powerful females, I’m happy to see this documentary take off,” Slatton concluded.